Keeping up in an industry that evolves as fast as AI is quite a task. So until an AI can do it for you, here’s a handy roundup of the past week’s stories in the world of machine learning, along with notable research and experiments that we didn’t cover on their own.

If it wasn’t already obvious, the competitive landscape in AI – particularly the subfield known as generative AI – is red-hot. And it’s getting hotter. This week, Dropbox launched its first corporate venture fund, Dropbox Ventures, which the company said would focus on startups building AI-powered products that “shape the future of work.” Not to be outdone, AWS debuted a $100 million program to fund generative AI initiatives led by its partners and customers.

There’s certainly a lot of money being thrown around in the AI space. Salesforce Ventures, the VC division of Salesforce, plans to invest $500 million in startups developing generative AI technologies. Workday recently added $250 million to its existing VC fund specifically to support AI and machine learning startups. And Accenture and PwC have announced they plan to invest $3 billion and $1 billion, respectively, in AI.

But you may wonder if money is the solution to the big challenges in the field of AI.

In an illuminating panel at a Bloomberg conference in San Francisco this week, Meredith Whittaker, the president of secure messaging app Signal, argued that the technology underpinning some of today’s most popular AI apps is becoming dangerously opaque. She gave an example of someone walking into a bank and asking for a loan.

That person may be denied the loan and have “no idea that there’s a system in [the] back, probably powered by some Microsoft API that determined, based on social media scrapes, that I wasn’t creditworthy,” Whittaker said. “I’ll never know [because] there is no mechanism for me to know this.”

It’s not the capital that matters. Rather, it’s the current power hierarchy, Whittaker says.

“I’ve been sitting at the table for about 15 years, 20 years. I have been at the table. Sitting at the table without power is nothing,” she continued.

Of course, achieving structural change is much more difficult than scrounging for money, especially when the structural change is not necessarily in favor of the powers that be. And Whittaker warns of what could happen if there isn’t enough pushback.

As advancements in AI accelerate, so do the societal impacts, and we’ll continue down a “hype-filled road to AI,” she said, “where that power is entrenched and naturalized under the guise of intelligence and we are controlled to the point [of having] very, very little say in our individual and collective lives.”

That should give the industry pause. Whether it actually will is another matter. That’s probably something we’ll hear discussed when she takes the stage at Disrupt in September.

Here are the other AI headlines from the past few days:

- DeepMind’s AI controls robots: DeepMind says it has developed an AI model called RoboCat that can perform a range of tasks on different models of robot arms. That alone is not particularly new. But DeepMind claims the model is the first to be able to solve and adapt to multiple tasks and do so using different real-world robots.

- Robots learn from YouTube: Speaking of robots, CMU Robotics Institute assistant professor Deepak Pathak this week showed off VRB (Vision-Robotics Bridge), an AI system designed to train robotic systems by watching a recording of a human. The robot pays attention to a few key pieces of information, including contact points and trajectory, and then attempts to complete the task.

- Otter gets into the chatbot game: Automatic transcription service Otter this week announced a new AI-powered chatbot that allows attendees to ask questions during and after a meeting and collaborate with teammates.

- EU calls for AI regulation: European regulators are at a crossroads over how AI will be regulated, and ultimately used commercially and non-commercially, in the region. This week, the EU’s largest consumer group, the European Consumer Organisation (BEUC), weighed in with its own position: Stop dragging your feet and “launch urgent studies now on the risks of generative AI,” it said.

- Vimeo launches AI-powered features: This week, Vimeo announced a suite of AI-powered tools designed to help users create scripts, record footage using a built-in teleprompter, and remove long pauses and unwanted disfluencies like “ahs” and “ums” from the recordings.

- Capital for synthetic voices: ElevenLabs, the viral AI-powered platform for creating synthetic voices, has raised $19 million in a new round of funding. ElevenLabs picked up steam fairly quickly after launching at the end of January. But the publicity hasn’t always been positive – especially not once bad actors started exploiting the platform for their own purposes.

- Convert audio to text: Gladia, a French AI startup, has launched a platform that uses OpenAI’s Whisper transcription model to convert any audio into text, via an API, in near real time. Gladia promises it can transcribe an hour of audio for $0.61, with the transcription process taking about 60 seconds.

- Harness embraces generative AI: Harness, a startup creating a toolkit to help developers work more efficiently, injected its platform with a bit of AI this week. Now Harness can automatically fix build and deployment errors, find and fix security vulnerabilities, and make suggestions to get cloud costs under control.

Other machine learning

This week CVPR was in Vancouver, Canada, and I wish I could have been there because the talks and papers look super interesting. If you can only watch one, check out Yejin Choi’s keynote about the possibilities, impossibilities and paradoxes of AI.

Image Credits: CVPR/YouTube

The UW professor and MacArthur Genius grant recipient first covered some unexpected limitations of today’s most capable models. GPT-4 in particular is very bad at multiplication. It fails surprisingly quickly to correctly find the product of two three-digit numbers, although with a little coaxing it can get it right 95% of the time. Why does it matter that a language model can’t do math, you ask? Because the entire AI market right now is based on the idea that language models generalize well to a host of interesting tasks, including things like doing your taxes or accounting. Choi’s point was that we should look for AI’s limitations and work inward from them, not the other way around, because that tells us more about the models’ capabilities.

The other parts of her talk were equally interesting and thought provoking. You can view it in its entirety here.

Rod Brooks, introduced as a “slayer of hype”, gave an interesting history of some of the core concepts of machine learning — concepts that seem new only because most of the people applying them weren’t around when they were invented! He goes through the decades, touching on McCulloch, Minsky and even Hebb – showing how the ideas remained relevant well beyond their time. It’s a handy reminder that machine learning is a field that stands on the shoulders of giants dating back to the post-war era.

There are loads of papers submitted to and presented at CVPR, and it’s reductive to look at just the award winners, but this is a news roundup, not a comprehensive literature review. So here’s what the judges at the conference found most interesting:

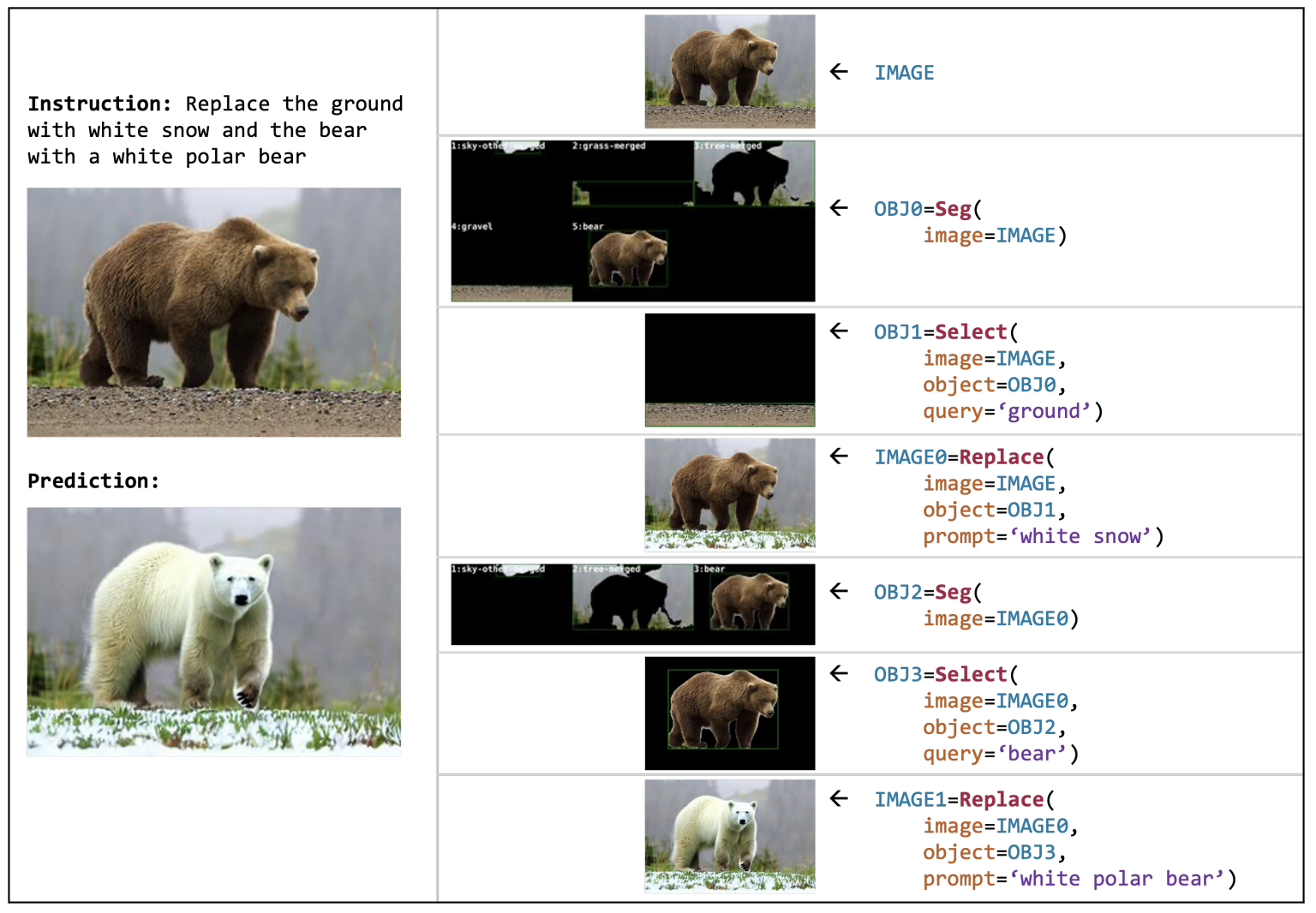

Image Credits: AI2

VISPROG, from researchers at AI2, is a kind of meta-model that performs complex visual manipulation tasks using a multipurpose code toolbox. Suppose you have a picture of a grizzly bear on some grass (as pictured) – you can tell it to “replace the bear with a polar bear in the snow” and it will start working. It identifies the parts of the image, separates them visually, searches for and finds or generates a suitable replacement, and intelligently stitches the whole thing back together without further prompting from the user. Blade Runner’s “enhance” interface is starting to look downright pedestrian. And that’s just one of many possibilities.

“Planning-oriented autonomous driving”, from a multi-institutional Chinese research group, tries to unify the different parts of the rather piecemeal approach we’ve taken to self-driving cars. Usually there is some kind of step-by-step process of ‘perceiving, predicting and planning’, each of which can have a number of sub-tasks (such as segmenting people, identifying obstacles, etc.). Their model tries to put all of these into one model, kind of like the multimodal models we see that can use text, audio, or images as inputs and outputs. Likewise, this model simplifies in some ways the complex interdependencies of a modern autonomous driving stack.

DynIBaR shows a high-quality and robust method of interacting with video using “dynamic Neural Radiance Fields,” or NeRFs. An understanding of the objects in the video allows for things like stabilization, dolly moves, and other things you wouldn’t normally expect to be possible once the video has already been shot. Again… “enhance.” This is definitely the kind of thing Apple hires you for, then takes credit for at the next WWDC.

DreamBooth you may remember from a little earlier this year when the project page went live. It is the best system yet for creating deepfakes. Of course, doing this kind of image editing is valuable and powerful, not to mention fun, and researchers like those at Google are working to make it more seamless and realistic. Consequences… maybe later.

The award for the best student paper goes to a method of comparing and matching meshes, or 3D point clouds. Frankly, it’s too technical for me to explain, but this is an important capability for real-world perception and improvements are welcome. Read the paper here for examples and more information.

Two more nuggets: Intel showed off this interesting model, LDM3D, for generating 3D 360 images such as virtual environments. So if you’re in the metaverse and you say “put us in an overgrown ruin in the jungle” it just creates a new one on demand.

And Meta released a speech synthesis tool called Voicebox that’s super good at extracting attributes from voices and replicating them even if the input isn’t clean. Usually for voice replication, you need a good amount and variety of clean voice recordings, but Voicebox outperforms many others, using less data (think 2 seconds). Luckily, they’re keeping the genie in the bottle for now. For those who think they might need to have their voice cloned, check out Acapela.